What is Sensor Stream Pipe?

https://github.com/moetsi/Sensor-Stream-Pipe

Moetsi's Sensor Stream Pipe (SSP) is the first open-source C++ modular kit-of-parts that compresses, streams, and processes sensor data (RGB-D). It efficiently compresses raw data streams, allowing developers to send multiple video types over the network in real time. Frame data can be sent in its raw form (JPG/PNG frames) or compressed with a wide range of codecs, built on FFmpeg/Libav and NV Codec, to considerably reduce bandwidth.

SSP is designed to overcome the limitations of on-device sensor data processing. It encodes and compresses your device’s sensor output (such as color or depth frames) and transmits it to a remote server, where it can be decoded and processed at scale. Taking data processing off the device lets you run far more powerful computations on your sensor data and make the most of the tools at your disposal.

Currently, Moetsi’s Sensor Stream Pipe supports:

.mkv (Matroska) RGB-D recordings (these are created using the Azure Kinect recorder utility)

Live Azure Kinect DK RGB-D/IR sensor streams

Computer vision/spatial computing datasets (e.g. BundleFusion, MS RGB-D 7 scenes and VSFS)

iOS ARKit data (streams ARFrame data)

Features include:

Synchronized streaming of color, depth and IR frames

Support for Azure Kinect DK (live and recorded video streaming), image datasets (e.g. BundleFusion, MS RGB-D 7 scenes and VSFS) and .mkv (Matroska) files

Hardware-accelerated encoding (e.g. NVIDIA's NV Codec), which lowers encoding latency and bandwidth without compromising on quality

Interoperability with Libav and FFmpeg, giving you a highly flexible choice of codecs and encoding settings

Access to the calibration data for each of the sensors on the Kinect, enabling you to build a point cloud from the color and depth images, perform body tracking, etc.
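To make the point-cloud use case concrete, here is a minimal sketch of back-projecting a depth image into 3D points with a pinhole camera model. It assumes depth values in millimetres (as the Azure Kinect produces) and hypothetical intrinsics (`fx`, `fy`, `cx`, `cy`) taken from the calibration data. It ignores lens distortion, which the Azure Kinect SDK's own `k4a_calibration_2d_to_3d` and `k4a_transformation_depth_image_to_point_cloud` functions do account for. The struct and function names are illustrative, not part of SSP.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical pinhole intrinsics of the depth camera, in pixels.
// Real values would come from the sensor's calibration data.
struct PinholeIntrinsics {
    float fx, fy, cx, cy;
};

// A 3D point in metres, in the depth camera's coordinate frame.
struct Point3 {
    float x, y, z;
};

// Back-projects every valid depth pixel (millimetres, 0 = no reading):
//   Z = d / 1000,  X = (u - cx) * Z / fx,  Y = (v - cy) * Z / fy
// Lens distortion is ignored in this sketch.
std::vector<Point3> DepthToPointCloud(const std::vector<uint16_t>& depth_mm,
                                      int width, int height,
                                      const PinholeIntrinsics& k) {
    assert(depth_mm.size() == static_cast<std::size_t>(width) * height);
    std::vector<Point3> cloud;
    cloud.reserve(depth_mm.size());
    for (int v = 0; v < height; ++v) {
        for (int u = 0; u < width; ++u) {
            const uint16_t d = depth_mm[static_cast<std::size_t>(v) * width + u];
            if (d == 0) continue;  // skip pixels without a depth reading
            const float z = d / 1000.0f;
            cloud.push_back({(u - k.cx) * z / k.fx, (v - k.cy) * z / k.fy, z});
        }
    }
    return cloud;
}
```

In a real pipeline you would read the intrinsics from the sensor's calibration data and, to color the points, map depth into the color camera's frame first.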

But why though...?

If you have 4 sensor streams and want to do an environment reconstruction using their data feeds

If you have a couple of sensors and want to find where they are relative to each other

If you want to run pose detection algorithms on a dozen sensors and synthesize the results into a single 3D model

Basically, if you want to do any spatial computing/computer vision on multiple incoming data streams

You can use Sensor Stream Server to send compressed sensor data, reducing bandwidth requirements, and Sensor Stream Client to receive these streams as the ingestion step of a computer vision/spatial computing pipeline.

If you want to synthesize RGB-D+ data from multiple feeds in real-time, you will probably need something like Sensor Stream Pipe.

Problems?! (shocker)

Reach out on our Discord and we will get you going!

Component parts

The ssp_server is the frame encoder and sender.

"Frames" are samples of data from a frame source. For example, the Azure Kinect collects RGB (color), depth, and IR data. To stream RGB-D and IR, we sample our frame source (the Azure Kinect) and create 3 frames, one per frame type: 1 for color data, 1 for depth data, and 1 for IR data. We then package these 3 frames as a zmq (ZeroMQ) message and send it through a zmq socket.
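The packaging step can be pictured as serializing all frames from one sample into a single buffer that becomes one zmq message. The sketch below uses a made-up length-prefixed layout and hypothetical `Frame`/`FrameType` types purely to illustrate the idea; SSP's actual frame structure and serialization differ, and the socket send itself is left out so the example has no ZeroMQ dependency.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <utility>
#include <vector>

// Hypothetical frame types and frame layout, for illustration only.
enum class FrameType : uint8_t { Color = 0, Depth = 1, IR = 2 };

struct Frame {
    FrameType type;
    uint64_t timestamp_us;      // capture time shared by all frames of one sample
    std::vector<uint8_t> data;  // encoded (or raw) frame bytes
};

// Appends `value` as `n` little-endian bytes.
static void PutLE(std::vector<uint8_t>& out, uint64_t value, int n) {
    for (int i = 0; i < n; ++i) out.push_back(static_cast<uint8_t>(value >> (8 * i)));
}

// Reads `n` little-endian bytes starting at `pos` and advances `pos`.
static uint64_t GetLE(const std::vector<uint8_t>& in, std::size_t& pos, int n) {
    if (pos + n > in.size()) throw std::runtime_error("truncated message");
    uint64_t value = 0;
    for (int i = 0; i < n; ++i) value |= static_cast<uint64_t>(in[pos + i]) << (8 * i);
    pos += n;
    return value;
}

// Packs one sample's frames into one buffer:
// [count:u32] then, per frame, [type:u8][timestamp:u64][size:u32][bytes].
std::vector<uint8_t> PackFrames(const std::vector<Frame>& frames) {
    assert(frames.size() <= UINT32_MAX);
    std::vector<uint8_t> out;
    PutLE(out, frames.size(), 4);
    for (const Frame& f : frames) {
        assert(f.data.size() <= UINT32_MAX);
        PutLE(out, static_cast<uint8_t>(f.type), 1);
        PutLE(out, f.timestamp_us, 8);
        PutLE(out, f.data.size(), 4);
        out.insert(out.end(), f.data.begin(), f.data.end());
    }
    return out;
}

// Inverse of PackFrames, as a receiver would apply to the message body.
std::vector<Frame> UnpackFrames(const std::vector<uint8_t>& in) {
    std::size_t pos = 0;
    const uint64_t count = GetLE(in, pos, 4);
    std::vector<Frame> frames;
    for (uint64_t i = 0; i < count; ++i) {
        Frame f;
        f.type = static_cast<FrameType>(GetLE(in, pos, 1));
        f.timestamp_us = GetLE(in, pos, 8);
        const std::size_t size = GetLE(in, pos, 4);
        if (pos + size > in.size()) throw std::runtime_error("truncated frame data");
        f.data.assign(in.begin() + pos, in.begin() + pos + size);
        pos += size;
        frames.push_back(std::move(f));
    }
    return frames;
}
```

Keeping all frame types from one sample in one message means the receiver gets color, depth, and IR together, which is one simple way to keep them synchronized. With cppzmq, the packed buffer could be copied into a `zmq::message_t` and sent on a socket.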

Grabbing frames is done by the IReader interface. More devices can be supported by adding a new implementation of the IReader interface.
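Here is a rough sketch of what adding a device could look like: a simplified reader interface plus one implementation that produces synthetic color and depth frames. The method names (`HasNextFrame`, `NextFrame`, `GetCurrentFrame`, `Reset`) and the `Frame` struct are assumptions for illustration; check the actual IReader header in the repository for the exact interface to implement.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Minimal frame type for this sketch (not SSP's own frame struct).
struct Frame {
    std::string type;  // e.g. "color", "depth", "ir"
    unsigned int frame_id;
    std::vector<uint8_t> data;
};

// Simplified reader interface in the spirit of SSP's IReader.
// Method names are assumptions made for this example.
class IReader {
public:
    virtual ~IReader() = default;
    virtual bool HasNextFrame() = 0;                   // is another sample available?
    virtual void NextFrame() = 0;                      // advance to the next sample
    virtual std::vector<Frame> GetCurrentFrame() = 0;  // all frames of the current sample
    virtual void Reset() = 0;                          // rewind to the first sample
};

// Example implementation: a synthetic "device" yielding a fixed number of
// color + depth samples. A real implementation would wrap a device SDK.
class SyntheticReader : public IReader {
public:
    explicit SyntheticReader(unsigned int num_samples) : num_samples_(num_samples) {}

    bool HasNextFrame() override { return next_ < num_samples_; }

    void NextFrame() override {
        assert(next_ < num_samples_);
        current_ = next_++;
    }

    void Reset() override { next_ = 0; }

    std::vector<Frame> GetCurrentFrame() override {
        assert(next_ > 0 && "call NextFrame() before GetCurrentFrame()");
        // Placeholder payloads; a real reader would return captured images.
        return {{"color", current_, std::vector<uint8_t>(12, 0x80)},
                {"depth", current_, std::vector<uint8_t>(8, 0x10)}};
    }

private:
    unsigned int num_samples_;
    unsigned int next_ = 0;     // index of the sample NextFrame() will move to
    unsigned int current_ = 0;  // index of the sample GetCurrentFrame() returns
};
```

The sending side then only needs a loop along the lines of `while (reader.HasNextFrame()) { reader.NextFrame(); auto frames = reader.GetCurrentFrame(); /* encode and send */ }`, regardless of which device sits behind the interface.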

Sensor Stream Server reads its configuration from a YAML file (examples in /configs). The config file tells Sensor Stream Server where to send its frames, which frame source to use (video, Azure Kinect, or dataset), and how each frame type should be encoded.

The ssp_clients are the frame receivers and decoders. They run on the remote processing server and receive the frames from the ssp_server for further processing.

There are a few templates for how you can use Sensor Stream Client:

Sensor Stream Client with OpenCV processing

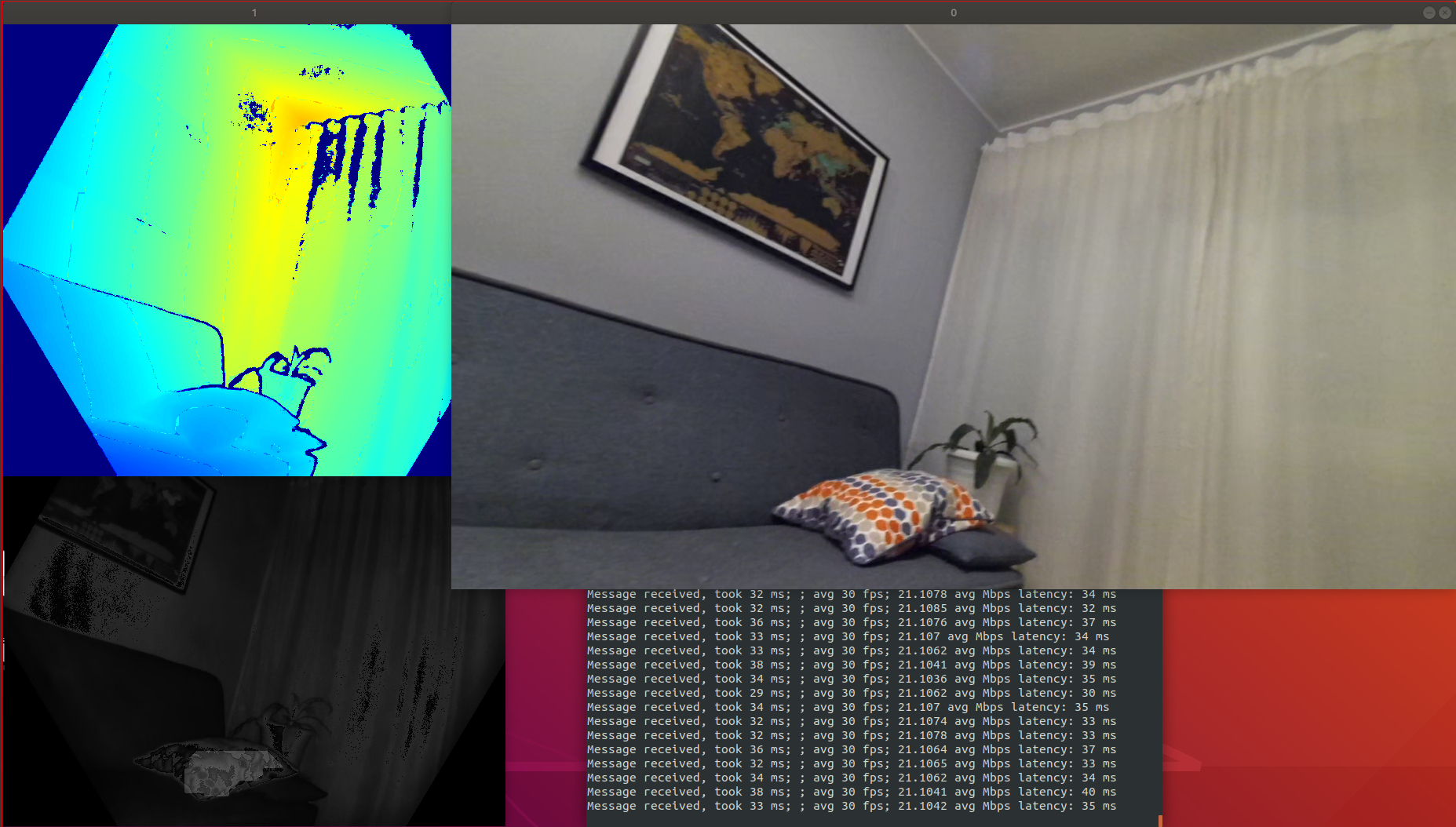

If you run Sensor Stream Client with OpenCV visualization, you can see it receiving real-time data from a Kinect DK and rendering it for on-screen display. In this scenario we achieved 20x data compression, reducing the stream from 400 Mbps to just 20 Mbps, with a PSNR of ~39 dB and a processing overhead of ~10-15 ms 😱.
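The figures above relate through two standard formulas: the compression ratio is the raw bitrate divided by the compressed bitrate (400 / 20 = 20), and PSNR compares a decoded frame against the original as PSNR = 10 * log10(MAX^2 / MSE). The sketch below computes both for 8-bit images (MAX = 255). The function names are hypothetical, and the text does not say exactly how the ~39 dB figure was measured (16-bit depth frames, for instance, would use a larger MAX).

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <limits>
#include <vector>

// Compression ratio from raw and compressed bitrates (same units).
double CompressionRatio(double raw_mbps, double compressed_mbps) {
    return raw_mbps / compressed_mbps;
}

// PSNR in dB between two 8-bit images of equal size:
//   PSNR = 10 * log10(255^2 / MSE)
// Returns +infinity when the images are identical (MSE = 0).
double Psnr8Bit(const std::vector<uint8_t>& reference,
                const std::vector<uint8_t>& decoded) {
    assert(reference.size() == decoded.size() && !reference.empty());
    double sum_sq = 0.0;
    for (std::size_t i = 0; i < reference.size(); ++i) {
        const double diff = static_cast<double>(reference[i]) - decoded[i];
        sum_sq += diff * diff;
    }
    const double mse = sum_sq / reference.size();
    if (mse == 0.0) return std::numeric_limits<double>::infinity();
    return 10.0 * std::log10(255.0 * 255.0 / mse);
}
```

For intuition: if every pixel is off by exactly 1, MSE is 1 and PSNR is about 48.1 dB, so ~39 dB corresponds to a somewhat larger average error.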

Sensor Stream Client is built so it can be an ingestion step for a spatial computing/computer vision pipeline.

Sensor Stream Tester

A reproducible tester for measuring SSP compression and quality. You can use this to measure how different encodings and settings affect bandwidth/compression.
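As a rough illustration of the bandwidth side of such measurements, the helpers below compute a stream's average bitrate from the sizes of its encoded frames, and the bitrate of the same stream uncompressed; dividing the second by the first gives the compression ratio. These are hypothetical helpers, not Sensor Stream Tester's code. For example, 640x576 16-bit depth frames (the Azure Kinect's narrow field-of-view unbinned depth mode) at 30 fps come to about 177 Mbps uncompressed.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Average bitrate (Mbps) of an encoded stream, given the size in bytes of
// each encoded frame and the stream's frame rate.
double AverageMbps(const std::vector<std::size_t>& frame_bytes, double fps) {
    assert(!frame_bytes.empty() && fps > 0.0);
    double total_bits = 0.0;
    for (std::size_t b : frame_bytes) total_bits += 8.0 * b;
    const double duration_s = frame_bytes.size() / fps;
    return total_bits / duration_s / 1e6;
}

// Bitrate (Mbps) of the same stream with no compression at all.
double RawMbps(int width, int height, int bytes_per_pixel, double fps) {
    assert(width > 0 && height > 0 && bytes_per_pixel > 0 && fps > 0.0);
    return static_cast<double>(width) * height * bytes_per_pixel * 8.0 * fps / 1e6;
}
```

Running the same recording through different encoder settings and comparing `AverageMbps` against `RawMbps` is one simple way to see how each setting trades bandwidth for quality.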

Getting started

We recommend going through Streaming a Video to get up to speed quickly. You will stream a pre-recorded RGB-D+ stream with Sensor Stream Server and receive it on Sensor Stream Client to get a quick feel for what Sensor Stream Pipe does.

Moetsi’s Permissive License

Moetsi’s Sensor Stream Pipe is licensed under the MIT license. That means you can use, modify, and redistribute it freely as long as the license notice is kept, but we’d really like to know what cool things you’re using our pipe for. Drop us an email or post on our forum to tell us all about it!

Future Work

Support for ARCore

Support for Quest 3

Support for Vision Pro

Updating Zdepth so it can be used with iOS devices

Authors

André Mourão - amourao

Olenka Polak - olenkapolak

Adam Polak - adammpolak
